I frequently find myself getting quite upset when reading articles about Quantum Mechanics (QM) in the popular press and related media. This subject is very hard to write about in a way that allows the reader to grasp the elements involved without inviting misconceptions. In many cases I think that these treatments do more harm than good by misinforming more than informing. I know of no way to quantify the misinformation/information ratio but my experience as a science explainer tells me that the ratio is often significantly larger than one.

I recently came across an article that tried to tackle some very difficult topics and, in my opinion at least, failed in almost every case and spectacularly so in a few. There are so many problems with this article that I had a lot of trouble deciding what to single out, but I finally settled on this:

"However, due to another phenomenon called decoherence, the act of measuring the photon destroys the entanglement."

This conflation of measurement and decoherence is very common and the problems with it are subtle. The error masks so much of what is both known and unknown about the subject that it does the reader a disservice: it hides the real story and hands them a filter that will almost certainly distort the subject, so that as more progress is made the already difficult material becomes even harder to comprehend.

So, what are "measurement" and "decoherence" in this context and what's wrong with the sentence I quoted? I'm going to try to explain this in a way that is simultaneously accessible without lots of background, comprehensive enough to cover the various aspects, and, most importantly for me, simple without oversimplifying.

"Measurement" seems innocuous enough. The word is used in everyday language in various ways that don't cause confusion and that is part of what causes the trouble here. When physicists say "measurement" in this context they have a specific concept, and related problem, in mind. The "Measurement Problem" is a basic unresolved issue that is at the core of the reasons that there are "interpretations" of quantum mechanics. There are lots of details that I'm not mentioning but the basic issue is that quantum mechanics says that once something is measured, if it is quickly measured again, the result will be the same.

That sounds trivial. How could that be a problem? What else could happen? It is an issue because another fundamental idea in QM is that of superposition: a system can be in a state where there is a set of probabilities for what result a measurement will get, and what actually happens is random. The details of the change from random to determined, caused by a measurement, are not part of the theory. In the interpretation that is both widely taught and widely derided, the Copenhagen Interpretation, the wavefunction is said to "collapse" as a result of the measurement. To say that this collapse is not well understood is a major understatement.

In summary, a quantum "measurement" can force the system to change its state in some important way. To use examples that you've probably heard: Before a measurement a particle can be both spin-up and spin-down, or at location A and location B, or the cat is both dead and alive. After the measurement the system will be in only one of those choices.
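For readers who think better in code, here is a toy sketch of the "measure it again and get the same answer" rule. It is only an illustration of the textbook collapse recipe, not a claim about what physically happens: the `measure` function and the dictionary-of-probabilities representation are my own simplifications, and a real quantum state carries complex amplitudes rather than bare probabilities.

```python
import random

def measure(state, rng):
    """Toy measurement.

    `state` maps each possible outcome to its probability (summing to 1).
    Returns the outcome that was seen and the state after the measurement,
    in which that outcome is certain.
    """
    outcomes = list(state)
    weights = [state[o] for o in outcomes]
    outcome = rng.choices(outcomes, weights=weights)[0]
    collapsed = {o: (1.0 if o == outcome else 0.0) for o in outcomes}
    return outcome, collapsed

rng = random.Random(42)  # seeded only so the run is repeatable
superposition = {"spin-up": 0.5, "spin-down": 0.5}

first, state = measure(superposition, rng)   # random: either result can occur
repeats = []
for _ in range(100):
    again, state = measure(state, rng)       # no longer random
    repeats.append(again)
```

The first call is a coin flip. Every later call returns whatever the first one did, because the state handed back by `measure` no longer contains the other option.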

The word "decoherence" is less familiar. It is obviously related to "coherent" so it is natural to think of it as a process where something is changed so that it loses some meaning. A good example would be a run-on sentence filled with adjectives and analogies that go at the concept from so many directions that meaning is lost rather than gained. That is not what decoherence is about. To explain what it is I need to provide some details of QM.

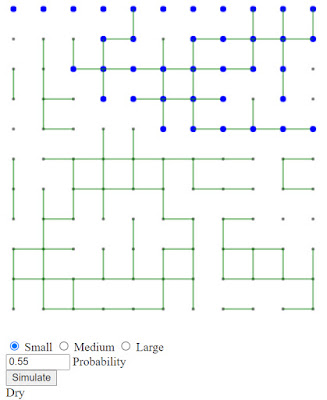

Quantum Mechanics can be described as a theory of waves. The mathematical object that encodes everything about a system is called a "wavefunction". Waves are another concept that is pretty familiar. Let's consider something that is familiar to many people: a sine wave.

There are lots of different sine waves, but they differ from each other in just three ways: Amplitude, Wavelength, and Phase. Amplitude is easy, it's just the "height" of the wave. These two waves differ only in amplitude:

Wavelength is easy as well. It is a measure of how "long" each wave is. These two waves differ only in wavelength. The red one is four times "longer" than the blue one:

Phase is less familiar. It is a "shift" in the wave. Here the blue one is "shifted" by 1/4 of a wavelength with respect to the red one. Note that it is the ratio of the wavelength to the shift that is important. Shifting a wave with a short wavelength by a fixed distance has a much bigger effect than one with a long wavelength.
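The point about the ratio can be checked with a few lines of Python. This is a minimal sketch; the function names `sine_wave` and `phase_fraction` and the example numbers are mine, chosen only for illustration.

```python
import math

def sine_wave(x, amplitude=1.0, wavelength=1.0, shift=0.0):
    """Height at position x of a sine wave moved to the right by `shift`."""
    return amplitude * math.sin(2 * math.pi * (x - shift) / wavelength)

def phase_fraction(shift, wavelength):
    """Fraction of one full cycle that a displacement of `shift` represents."""
    return (shift / wavelength) % 1.0

# The same 0.25-unit shift applied to a long wave and to a short wave.
long_wave = phase_fraction(0.25, wavelength=4.0)    # 1/16 of a cycle
short_wave = phase_fraction(0.25, wavelength=1.0)   # 1/4 of a cycle
```

The identical shift moves the short wave a quarter of the way through its cycle but the long wave only a sixteenth, which is why the shift alone tells you very little without the wavelength.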

From the perspective of QM these different characteristics are treated in very different ways. Without going into the reasons, here are some of the details. QM has a kind of mathematical automatic gain control. Amplitude is used to compute the probability that a measurement will have a certain value. This gain control ensures that all of those probabilities add to one. It is a bit of an overgeneralization but wavelength is the thing that defines the essential properties of the wave. Using light as an example, the wavelength of the light determines the color and (nearly) everything else about it. Phase is where much of the weirdness of QM comes from. It is built deeply into the structure of QM that there is no way to detect the phase of a wavefunction. Any two quantum mechanical "things" that differ only in phase cannot be distinguished by any measurement of the individual "things". But when they are allowed to interact with each other the effects can be dramatic.
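Both the "gain control" and the invisibility of phase can be seen in a short sketch. I am assuming the standard rule that a probability is the squared magnitude of a (complex) amplitude; the helper names `normalize` and `probabilities` and the example state are made up for the illustration.

```python
import cmath
import math

def normalize(amplitudes):
    """Rescale the amplitudes so the squared magnitudes sum to one."""
    norm = math.sqrt(sum(abs(a) ** 2 for a in amplitudes))
    return [a / norm for a in amplitudes]

def probabilities(amplitudes):
    """Probability of each outcome: squared magnitude after normalizing."""
    return [abs(a) ** 2 for a in normalize(amplitudes)]

state = [3, 4j]                       # deliberately not normalized
probs = probabilities(state)          # 9/25 and 16/25

# Give the whole state an arbitrary phase shift and look again.
rotated = [cmath.exp(1j * 1.234) * a for a in state]
probs_rotated = probabilities(rotated)
```

`probs` and `probs_rotated` agree to within rounding error: shifting the phase of the whole thing changes nothing that a measurement on it alone could pick up.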

If two waves of the same amplitude and wavelength but different phases are allowed to interact they add together to form a wave of the same wavelength but with an amplitude between zero and double the original amplitude. Here we see what happens if a red and blue wave of the same amplitude and nearly the same phase are added:

The resulting purple wave is about twice the amplitude of the starting wave. If the phases are arranged in a particular way the result is very different:

The two waves cancel and the result is zero. These waves are said to be "out of phase" and they essentially vanish. As noted above, amplitude relates to the probability of measurement outcomes. If the amplitude is zero, nothing happens. It is as if the object isn't there. This is the essence of the double slit experiment. If you aren't familiar with this (or want a refresher on the subject), the link in the previous sentence gives a good introduction. Another term you will hear is that the waves "interfere" with each other and form an "interference" pattern. The different path lengths provided by the two ways light can reach a given spot on the screen smoothly change the relative phase, so the result goes from being "out of phase" and dark to adding up to twice the brightness where there is constructive interference. When waves are combined and their phases have a slowly varying relationship the result is an interference pattern. A set of waves that has a fixed phase relationship is referred to as "coherent".
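The "between zero and double" behavior of two added waves follows from a standard trigonometric identity: two equal sine waves that differ by a phase φ add up to a single sine wave of amplitude 2A·|cos(φ/2)|. Here is a small sketch that computes that and double-checks it by brute force. The function names are mine.

```python
import math

def combined_amplitude(amplitude, phase_difference):
    """Amplitude of A*sin(t) + A*sin(t + phase_difference), from the identity."""
    return 2 * amplitude * abs(math.cos(phase_difference / 2))

def combined_amplitude_numeric(amplitude, phase_difference, samples=10000):
    """Brute-force check: the largest value of the summed wave over one cycle."""
    return max(
        amplitude * math.sin(t) + amplitude * math.sin(t + phase_difference)
        for t in (2 * math.pi * i / samples for i in range(samples))
    )

in_phase = combined_amplitude(1.0, 0.0)            # waves line up: double
quarter = combined_amplitude(1.0, math.pi / 2)     # in between: about 1.41
out_of_phase = combined_amplitude(1.0, math.pi)    # waves cancel: zero
```

Sweeping the phase difference smoothly from 0 to π takes the combined amplitude smoothly from double down to nothing, which is the bright-to-dark progression across an interference pattern.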

In this context the term "decoherence" is easier to understand. Decoherence occurs when the fixed relationship between the phases of elements of a system is lost. The phase of a wave is easily changed so this relationship is very delicate. Almost any interaction with the environment will affect it. This results in the destruction of any interference patterns in the results.
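To see how scrambled phases destroy an interference pattern, here is a rough simulation. It is a sketch under loud assumptions: the "environment" is modeled as nothing more than a random kick to the relative phase on each run, which is a cartoon of dephasing rather than a real treatment of decoherence, and the names `average_intensity` and `fringe_visibility` are my own.

```python
import cmath
import math
import random

def average_intensity(path_phase, noise_width, rng, trials=20000):
    """Average brightness where two unit waves meet.

    The relative phase is `path_phase` plus a random kick drawn uniformly
    from -noise_width to +noise_width (the stand-in for the environment).
    """
    total = 0.0
    for _ in range(trials):
        kick = rng.uniform(-noise_width, noise_width)
        total += abs(1 + cmath.exp(1j * (path_phase + kick))) ** 2
    return total / trials

def fringe_visibility(noise_width, seed=0):
    """Contrast between the brightest and darkest spots: 1 is a crisp
    pattern, 0 is a uniform smear with no pattern at all."""
    rng = random.Random(seed)
    bright = average_intensity(0.0, noise_width, rng)
    dark = average_intensity(math.pi, noise_width, rng)
    return (bright - dark) / (bright + dark)

undisturbed = fringe_visibility(0.0)       # fixed phase relationship
nudged = fringe_visibility(1.0)            # modest phase kicks
scrambled = fringe_visibility(math.pi)     # phase completely randomized
```

With no kicks the visibility is essentially 1. With kicks of up to one radian it is somewhat reduced, and when the phase is fully randomized it drops to roughly zero. Nothing was "measured" in this simulation. The pattern goes away purely because the phase relationship stopped being fixed.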

To recap, we are looking at two aspects of QM. First we have measurement. A quantum system can be constructed so that it is effectively in two states at once. This is often described as a particle being spin-up and spin-down at the same time, or Schrödinger's cat being both dead and alive. But once the measurement is made the results are stable. The system is seen to "collapse" into a known state. Next, we have decoherence, where interactions with the environment cause changes in the phase of quantum waves and prevent interference patterns.

So, let's look at that sentence again: "However, due to another phenomenon called decoherence, the act of measuring the photon destroys the entanglement." Some of the possible states of a multi-particle system have a property called "entanglement". That is another topic that richly deserves its own rant but the details don't matter here. All we need to know in this context is that when one element in the system is measured it affects the entire system. In this case the "collapse", caused by the measurement, changes the system so that it is no longer entangled. The loss of entanglement is the result of the measurement. No matter how carefully interactions with the environment, the cause of decoherence, were eliminated, the entanglement would still be lost.
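This can be made concrete with the smallest entangled system there is, a pair of two-state particles. The sketch below applies the textbook collapse rule to one particle and checks the entanglement before and after using "concurrence", a standard measure for pure two-particle states (0 means not entangled, 1 means maximally entangled). The function names and the tuple layout of the state are my own choices, and there is deliberately no environment anywhere in the code.

```python
import math
import random

def concurrence(state):
    """Entanglement of a pure two-qubit state with amplitudes
    (a00, a01, a10, a11): 0 is unentangled, 1 is maximally entangled."""
    a00, a01, a10, a11 = state
    return 2 * abs(a00 * a11 - a01 * a10)

def measure_first(state, rng):
    """Measure the first particle; return (result, collapsed state)."""
    a00, a01, a10, a11 = state
    p0 = abs(a00) ** 2 + abs(a01) ** 2      # chance the first particle reads 0
    if rng.random() < p0:
        norm = math.sqrt(p0)
        return 0, (a00 / norm, a01 / norm, 0.0, 0.0)
    norm = math.sqrt(1 - p0)
    return 1, (0.0, 0.0, a10 / norm, a11 / norm)

# An entangled pair: both particles read 0 or both read 1, equally likely.
bell = (1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2))

before = concurrence(bell)
result, after_state = measure_first(bell, random.Random(1))
after = concurrence(after_state)
```

`before` comes out as 1 (to rounding) and `after` as exactly 0, whichever result the measurement happened to give. The entanglement is gone, and the only thing that happened was the measurement.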

If the situation is as simple as I describe, with decoherence relating to phase and measurement relating to collapse, why are these concepts so often conflated? I think I understand the reason.

The article quoted above is an exception, but most of the time when these concepts are confused it is in the context of explaining why the quantum world and the day-to-day world seem so different. The weirdness of the quantum world is often divided into two categories. First, we have the ability of quantum objects to be in two (or more) states at once and the mysterious "collapse" to only one. Second, we have strange non-local behaviors. The double slit experiment has been done one particle at a time and the interference patterns persist. The particle, in some sense, is going through both slits at the same time. This effect, as described above, is exquisitely dependent on the system remaining coherent. It is easy to see why this effect goes away in the day-to-day world. The wavelength of an object gets smaller as it gets more massive. As noted above, it is the ratio of the shift to the wavelength that matters. A shorter wavelength makes the effect of any given shift much larger, and we get decoherence. The complexity of the day-to-day world is also important: there are so many particles that the number of possible interactions is enormous.
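To put rough numbers on "smaller as it gets more massive", the de Broglie relation gives the wavelength of a moving object as Planck's constant divided by its momentum. The sketch below uses the non-relativistic form (mass times speed), and the electron speed and the baseball's mass and speed are example values I picked for illustration.

```python
PLANCK_CONSTANT = 6.62607015e-34  # joule-seconds (exact by SI definition)

def de_broglie_wavelength(mass_kg, speed_m_per_s):
    """Non-relativistic de Broglie wavelength in meters: h / (m * v)."""
    return PLANCK_CONSTANT / (mass_kg * speed_m_per_s)

# An electron at a million meters per second (illustrative speed).
electron = de_broglie_wavelength(9.109e-31, 1.0e6)   # about 7e-10 m

# A 145 gram baseball at 40 meters per second (illustrative values).
baseball = de_broglie_wavelength(0.145, 40.0)        # about 1e-34 m
```

The electron's wavelength is comparable to the size of an atom. The baseball's is about twenty-four orders of magnitude smaller, so even an unimaginably tiny disturbance is a huge shift compared to its wavelength.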

Less obvious is the first category, superposition and "collapse". There is a lot of work being done to try to explain why the superposition and apparent "collapse" of QM aren't part of our normal day-to-day experience. It looks like decoherence is central to this situation as well. The details are subtle but it isn't, as implied by the quote at the start of this post, that decoherence causes the wavefunction collapse. It is that decoherence effectively removes all but one possible result from the available quantum outcomes. The term for this, explained here, is einselection, a portmanteau of "environment-induced superselection". This is far from an easy read but it is, at least in my opinion, about as accessible as it can be. The unfortunate result is that decoherence has become entwined (you might say entangled but the pun isn't worth it) with the concept of collapse and we get the conflation that this rant is about.

This treatment is far from complete but I hope it makes decoherence more comprehensible and shows how it is distinct from measurement and collapse, at least in the simplest cases.